Local Serverless Development Part 3: Mimic AWS Services Locally with LocalStack

This is part 3 in my Serverless mini-series. You can find the previous parts here:

Part 1

Part 2

As I mentioned in the previous post, I’ve been working on a local development environment for a serverless architecture.

In the first post we covered invoking Lambdas locally using the Serverless Framework, and we did this for several runtimes.

The second post followed up on this by introducing AWS SAM Local for running our Lambdas locally as an API Gateway, as Serverless Framework didn’t support this.

In this post I’m going to cover setting up and using LocalStack to mimic AWS services locally.

As always, I’ve added the code for this post to GitHub; you can find the code for the series here.

Part 3

Since I created that post, the way to install AWS SAM Local has changed - if you need to install, or need to update from an old version, check the instructions here.

This post will cover how to streamline running things locally - we want minimal installation steps and commands to run things. We’ll also add in LocalStack so that we can add interaction with other AWS services without needing to deploy anything.

First, let’s get LocalStack up and running.

LocalStack mimics AWS services and runs them on your localhost. Once running, you can interact with them as you would with real AWS services - for example, you can create DynamoDB tables through the AWS CLI.

I find this incredibly handy because, as I said previously, when I’m tinkering with code locally I don’t want to keep deploying services just to see whether things work. In my opinion, that should come later in the process.

You can install LocalStack using pip by running `pip install localstack`.

If you don’t have Python or pip installed, you can follow these instructions to get set up.

Once installed, open a terminal and run `SERVICES=s3,dynamodb localstack start`. Append the `--docker` option if you want it to run in a container.

NOTE: There’s currently an issue installing LocalStack on Windows - whilst the installation goes fine, you’ll notice you’re unable to run it normally. If you’re on Windows, you can run LocalStack through a container with the command `docker run -p 8080:8080 -p 4567-4582:4567-4582 -e SERVICES=s3,dynamodb localstack/localstack`.

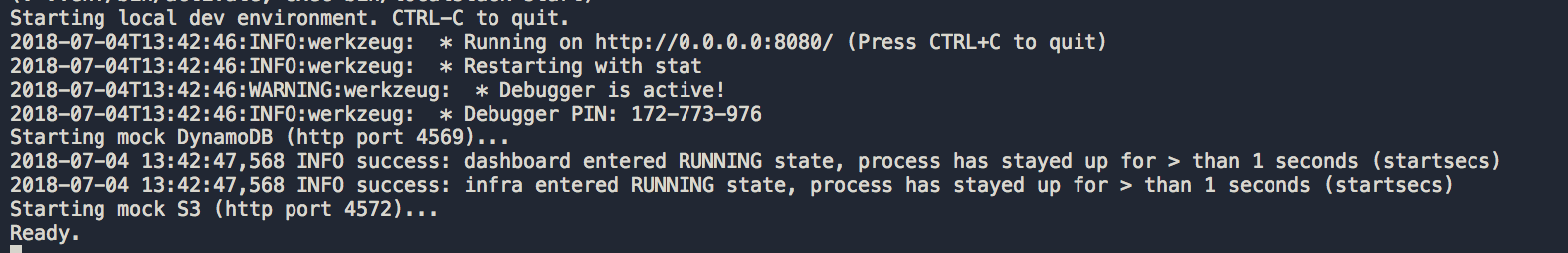

As you can see from the command and output, we have S3 and DynamoDB running on local ports. If you want to check the behaviour of these (I’ve only used some basic operations so far) you can point the AWS CLI to your localhost and run commands. For example, to create a table on LocalStack:

    aws --endpoint-url=http://localhost:4569 dynamodb create-table --table-name MusicCollection --attribute-definitions AttributeName=Artist,AttributeType=S AttributeName=SongTitle,AttributeType=S --key-schema AttributeName=Artist,KeyType=HASH AttributeName=SongTitle,KeyType=RANGE --provisioned-throughput ReadCapacityUnits=5,WriteCapacityUnits=5
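
The same table can also be created from Python. This is a minimal sketch rather than code from the series repository: it assumes boto3 is installed and that LocalStack's DynamoDB is listening on `localhost:4569` as in the CLI command. The helper names, the region and the dummy credentials are placeholders chosen for illustration.

```python
def music_collection_table_args():
    """Return create_table arguments mirroring the AWS CLI command."""
    return {
        "TableName": "MusicCollection",
        "AttributeDefinitions": [
            {"AttributeName": "Artist", "AttributeType": "S"},
            {"AttributeName": "SongTitle", "AttributeType": "S"},
        ],
        "KeySchema": [
            {"AttributeName": "Artist", "KeyType": "HASH"},
            {"AttributeName": "SongTitle", "KeyType": "RANGE"},
        ],
        "ProvisionedThroughput": {"ReadCapacityUnits": 5, "WriteCapacityUnits": 5},
    }


def create_music_collection_table(dynamodb_client):
    """Create the table through any client exposing create_table()."""
    return dynamodb_client.create_table(**music_collection_table_args())


def local_dynamodb_client(endpoint_url="http://localhost:4569"):
    """Build a boto3 DynamoDB client pointed at LocalStack."""
    import boto3  # imported lazily so the helpers above work without boto3

    return boto3.client(
        "dynamodb",
        endpoint_url=endpoint_url,     # port taken from the CLI example
        region_name="us-east-1",       # placeholder region (assumption)
        aws_access_key_id="test",      # dummy credentials (assumption)
        aws_secret_access_key="test",
    )
```

With LocalStack running, `create_music_collection_table(local_dynamodb_client())` should behave like the CLI command.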

You can also open up localhost:8080 in your browser to get a visual dashboard of the AWS services running, including any created components.

You can skip setting the services if you want to run all available services. I’ve simply limited them in the command to speed things up, as starting Elasticsearch can be slow and my example code will only use S3 and DynamoDB.
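
Because startup time depends on which services you enable, it can help to wait until the ports accept connections before running anything against them. The following is a rough sketch using only the standard library. The DynamoDB port comes from the CLI example above; the S3 port is an assumption based on LocalStack's per-service port layout at the time, so check the startup output for the real value. The function names are illustrative.

```python
import socket
import time

# DynamoDB port is from the CLI example; the S3 port is an assumption.
LOCALSTACK_PORTS = {"dynamodb": 4569, "s3": 4572}


def is_port_open(host, port, timeout=1.0):
    """Return True if a TCP connection to host:port succeeds."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        return False


def wait_for_services(ports, host="localhost", attempts=30, delay=1.0):
    """Poll until every port accepts connections.

    Returns the sorted names of services that never became reachable,
    so an empty list means everything is up.
    """
    pending = dict(ports)
    for attempt in range(attempts):
        pending = {name: port for name, port in pending.items()
                   if not is_port_open(host, port)}
        if not pending:
            break
        if attempt < attempts - 1:
            time.sleep(delay)
    return sorted(pending)
```

Calling `wait_for_services(LOCALSTACK_PORTS)` returns an empty list once both ports are reachable. An open port only shows that something is listening, not that the service has finished initialising.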

Now we have LocalStack available, we can add some code to interact with AWS into our Lambdas. In the HelloPython Lambda I’ve added the ability to check an S3 bucket exists, create it if it doesn’t, then check an object exists, and create it if it doesn’t. It will then return the content of that object:

    import boto3
    import os


    def hello(event, context):
        # Endpoint and credentials come from environment variables, so the
        # same code can target LocalStack locally or AWS when deployed.
        AWS_ACCESS_KEY_ID = os.getenv('AWS_ACCESS_KEY_ID')
        AWS_SECRET_ACCESS_KEY = os.getenv('AWS_SECRET_ACCESS_KEY')
        ENDPOINT_URL = os.getenv('S3_ENDPOINT_URL')

        my_bucket_name = "ians-bucket"
        my_file_name = "plan_for_world_domination.txt"

        s3_client = boto3.client('s3',
                                 endpoint_url=ENDPOINT_URL,
                                 aws_access_key_id=AWS_ACCESS_KEY_ID,
                                 aws_secret_access_key=AWS_SECRET_ACCESS_KEY)

        create_bucket(s3_client, my_bucket_name)
        create_file(s3_client, my_bucket_name, my_file_name)

        response = {
            "statusCode": 200,
            "body": get_file_body(s3_client, my_bucket_name, my_file_name)
        }
        return response


    def create_bucket(s3_client, bucket_name):
        # Only create the bucket if it isn't already listed.
        all_buckets = s3_client.list_buckets()
        create_bucket = True
        for bucket in all_buckets['Buckets']:
            if bucket['Name'] == bucket_name:
                create_bucket = False
                break

        if create_bucket:
            print("Bucket doesn't exist - creating bucket")
            bucket = s3_client.create_bucket(Bucket=bucket_name)


    def create_file(s3_client, bucket_name, file_name):
        # Only create the object if no existing key matches.
        all_files = s3_client.list_objects(
            Bucket=bucket_name
        )
        create_file = True
        if 'Contents' in all_files:
            for item in all_files['Contents']:
                if item['Key'] == file_name:
                    create_file = False
                    break

        if create_file:
            print("File doesn't exist - creating file")
            response = s3_client.put_object(
                Bucket=bucket_name,
                Body='just keep blogging and hope for the best!',
                Key=file_name
            )


    def get_file_body(s3_client, bucket_name, file_name):
        # Read the object back and decode it to text.
        retrieved = s3_client.get_object(
            Bucket=bucket_name,
            Key=file_name
        )
        file_body = retrieved['Body'].read().decode('utf-8')
        print(file_body)
        return file_body

And in the GoodbyePython Lambda I’ve added the ability to write to a DynamoDB table:

    import json
    import os
    import boto3


    def goodbye(event, context):
        # Endpoint and credentials come from environment variables, so the
        # same code can target LocalStack locally or AWS when deployed.
        AWS_ACCESS_KEY_ID = os.getenv('AWS_ACCESS_KEY_ID')
        AWS_SECRET_ACCESS_KEY = os.getenv('AWS_SECRET_ACCESS_KEY')
        ENDPOINT_URL = os.getenv('DYNAMO_ENDPOINT_URL')

        client = boto3.client('dynamodb',
                              endpoint_url=ENDPOINT_URL,
                              aws_access_key_id=AWS_ACCESS_KEY_ID,
                              aws_secret_access_key=AWS_SECRET_ACCESS_KEY)

        my_table_name = "ians-table"
        my_attribute_name = "ians-key"
        my_key_value = "world domination plan"

        create_table(client, my_table_name, my_attribute_name)
        create_item(client, my_table_name, my_attribute_name, my_key_value)

        response = {
            "statusCode": 200,
            "body": "Database is up to date!"
        }
        return response


    def does_table_need_creating(dynamo_client, table_name):
        # True unless the table name already appears in the table list.
        all_tables = dynamo_client.list_tables()
        create_table = True
        for table in all_tables['TableNames']:
            if table == table_name:
                create_table = False
                break
        return create_table


    def create_table(dynamo_client, table_name, attribute_name):
        if does_table_need_creating(dynamo_client, table_name):
            print('Table not found - creating table')
            dynamo_client.create_table(
                AttributeDefinitions=[
                    {
                        'AttributeName': attribute_name,
                        'AttributeType': 'S'
                    },
                ],
                TableName=table_name,
                KeySchema=[
                    {
                        'AttributeName': attribute_name,
                        'KeyType': 'HASH'
                    },
                ],
                ProvisionedThroughput={
                    'ReadCapacityUnits': 123,
                    'WriteCapacityUnits': 123
                }
            )


    def does_item_need_creating(dynamo_client, table_name, attribute_name, key_value):
        # True unless get_item returns an existing item for this key.
        get_item_response = dynamo_client.get_item(
            TableName=table_name,
            Key={
                attribute_name: {
                    'S': key_value
                }
            }
        )
        create_item = True
        if 'Item' in get_item_response:
            create_item = False
        return create_item


    def create_item(dynamo_client, table_name, attribute_name, key_value):
        if does_item_need_creating(dynamo_client, table_name, attribute_name, key_value):
            print('Item not found - creating item')
            item_response = dynamo_client.put_item(
                TableName=table_name,
                Item={
                    attribute_name: {
                        'S': key_value
                    }
                }
            )

As you can see in each of these, the URL for the AWS service is retrieved from the environment variables, as was demonstrated in the last post. This means that when running locally we can run against LocalStack, but when deploying the API to different stages we can easily point the environment variables at files containing the appropriate addresses for the running services.
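
Both Lambdas repeat the same environment lookups, so the pattern could be pulled into a small helper. This is a sketch, not code from the repository: `client_kwargs` is a hypothetical name, and forwarding `AWS_SESSION_TOKEN` is an addition that the handlers above don't make. The Lambda runtime sets that variable alongside its temporary credentials.

```python
import os


def client_kwargs(endpoint_env_var, environ=None):
    """Build keyword arguments for boto3.client() from environment variables.

    Unset or blank variables are left out, so boto3 falls back to its
    default endpoint and credential lookup.
    """
    environ = os.environ if environ is None else environ
    candidates = {
        "endpoint_url": environ.get(endpoint_env_var),
        "aws_access_key_id": environ.get("AWS_ACCESS_KEY_ID"),
        "aws_secret_access_key": environ.get("AWS_SECRET_ACCESS_KEY"),
        # Forwarded only when present; not part of the original handlers.
        "aws_session_token": environ.get("AWS_SESSION_TOKEN"),
    }
    return {name: value for name, value in candidates.items() if value}
```

A handler would then call `boto3.client('s3', **client_kwargs('S3_ENDPOINT_URL'))`. With `S3_ENDPOINT_URL` unset, no `endpoint_url` is passed and the client talks to the default AWS endpoint.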

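Because AWS SAM runs the Lambdas in a container itself, `localhost` inside that container refers to the container rather than your machine. The endpoint URLs therefore need to use the Docker DNS name for the host - on Windows that’s `docker.for.win.localhost` and on Mac it’s `docker.for.mac.localhost`.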
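
If you generate the endpoint values with a script, the host name can be picked per platform. This is a small sketch meant to run on the host machine, not inside the Lambda container. `localstack_endpoint` is a hypothetical helper, and it only knows the two host names mentioned above.

```python
import platform

# Host names quoted in this post for reaching the host from a container.
DOCKER_HOST_ALIASES = {
    "Windows": "docker.for.win.localhost",
    "Darwin": "docker.for.mac.localhost",  # platform.system() value on macOS
}


def localstack_endpoint(port, system=None):
    """Return the URL a SAM Local container can use to reach LocalStack."""
    system = platform.system() if system is None else system
    try:
        host = DOCKER_HOST_ALIASES[system]
    except KeyError:
        # Other platforms are not covered by this post, so fail loudly.
        raise ValueError("no Docker host alias known for " + system)
    return "http://{}:{}".format(host, port)
```

For example, `localstack_endpoint(4569)` on a Mac gives the value to use for `DYNAMO_ENDPOINT_URL`.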

Now we can run everything locally, fully running our Lambdas as an API Gateway locally and even interacting with AWS services (in the form of LocalStack), and we’ve also got an easy way to deploy everything to different stages in AWS, swapping our environment variables as appropriate.

So the sequence of events to get things running locally is:

- Run `serverless sam export -o template.yml` in the folder containing the lambdas, to make sure your template file is up to date with all environment variables
- Run LocalStack with `SERVICES=s3,dynamodb localstack start` or `docker run -p 8080:8080 -p 4567-4582:4567-4582 -e SERVICES=s3,dynamodb localstack/localstack` to start up LocalStack with S3 and DynamoDB available
- In a separate terminal, run `sam local start-api` in the folder containing the lambdas
- Hit the endpoints `localhost:3000/hello` and `localhost:3000/goodbye` to invoke our Lambdas and interact with LocalStack
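
The last step can be scripted as a quick smoke test. The sketch below uses only the standard library and assumes both routes respond to GET requests on `localhost:3000`, as in the steps above. The function names are illustrative.

```python
import urllib.error
import urllib.request


def call_endpoint(path, base_url="http://localhost:3000", timeout=30):
    """GET base_url + path and return (status_code, body_text)."""
    try:
        with urllib.request.urlopen(base_url + path, timeout=timeout) as response:
            return response.status, response.read().decode("utf-8")
    except urllib.error.HTTPError as error:
        # Error responses still carry a status and body worth reporting.
        return error.code, error.read().decode("utf-8")


def smoke_test(paths=("/hello", "/goodbye"), base_url="http://localhost:3000"):
    """Call each endpoint once and return {path: (status, body)}."""
    return {path: call_endpoint(path, base_url) for path in paths}
```

Running `smoke_test()` while SAM Local and LocalStack are both up should return a 200 for each path, with the S3 object's content as the body for `/hello`.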

Unfortunately, because both AWS SAM Local and LocalStack are blocking processes, we can’t have a single command to run everything locally - and I don’t like that.

In the next post I’ll cover running AWS SAM Local and LocalStack in containers so that we can spin everything up with a single command.

As always, you can find the code for this post here.